Hold your breath, sci-fi fans: OpenAI, the research lab at the forefront of artificial intelligence, just dropped a paper that could reshape how we think about the future of AI. Titled “Towards Controllable Superhuman AI,” the paper explores the possibility of building AIs so powerful they surpass human capabilities in virtually every domain. Yes, you read that right: superhuman AI might be closer than we think.

The Sutskever Factor:

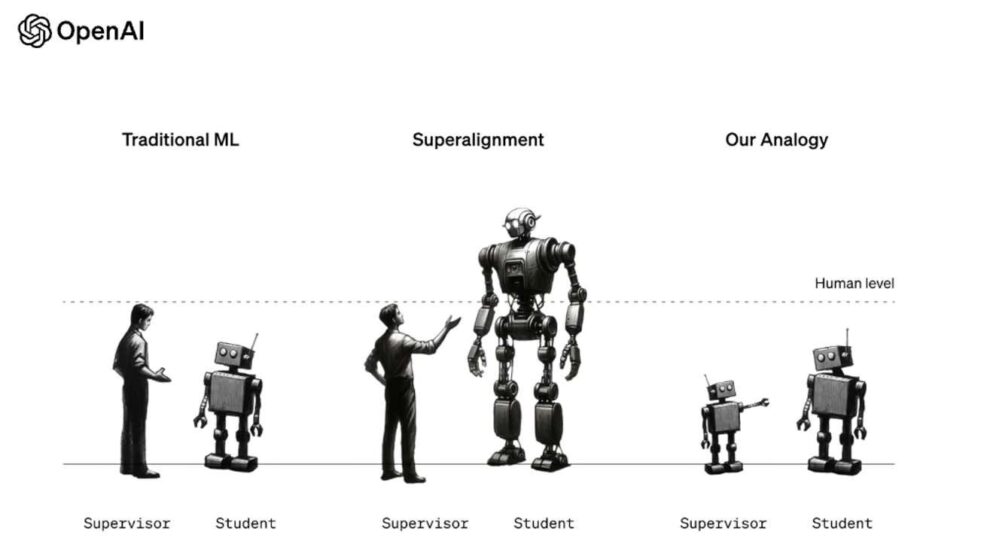

The paper lists Ilya Sutskever, OpenAI’s Chief Scientist, as a lead author, which lends weight to its claims. Sutskever, known for his groundbreaking work in deep learning and natural language processing, puts forward an approach to AI development called “Superalignment.” This framework aims to create AIs that are not only powerful but also aligned with human values and goals.

Beyond the Turing Test:

But wait, haven’t we been measuring machine intelligence for decades? The Turing Test, the classic benchmark, asks only whether a machine can pass for a human in conversation. Superalignment takes things a step further. It’s not just about mimicking human intelligence; it’s about building AIs that understand and prioritize human values like fairness, sustainability, and well-being. This is where things get exciting, and a little bit scary.

Hope for Humanity or Looming Dystopia?

Superalignment could unlock incredible benefits for humanity. Imagine AIs tackling climate change, eradicating diseases, or creating new forms of art and technology beyond our wildest dreams. However, the paper also acknowledges the risks. Superhuman AI, in the wrong hands, could pose a grave threat to our safety and autonomy.

The Control Conundrum:

So, how do we ensure these super-smart AIs don’t turn into Skynet? Superalignment proposes a series of safeguards, including carefully designed training datasets, robust safety protocols, and continuous human oversight. But will these measures be enough to control beings that could potentially outsmart us? That’s the multi-billion-dollar question, the Gordian knot of this ambitious endeavor.

OpenAI’s Bold Gamble:

OpenAI’s paper is a bold gamble. It pushes the boundaries of AI research and sparks a crucial conversation about the future of technology. While the path to Superalignment is fraught with challenges, it also represents a chance to shape the future of AI in a way that benefits humanity.

Remember:

- OpenAI’s new paper explores the possibility of building “superhuman AI” aligned with human values.

- This AI wouldn’t just be intelligent; it would understand and prioritize our goals and well-being.

- The paper acknowledges the risks involved, but also proposes safeguards to ensure AI safety and control.

- OpenAI’s research opens a critical discussion about the future of AI and its potential impact on humanity.

Superhuman AI might sound like science fiction, but OpenAI’s paper makes it a tangible possibility. The question is no longer whether we can build these powerful AIs, but how. Will we build them responsibly, with human values at the core? The future hinges on our ability to answer that question wisely. So, let’s keep the conversation going, engage in critical debate, and ensure that Superalignment becomes a force for good, not a harbinger of dystopia. The future of AI is in our hands, and the time to act is now.

Superhuman AI Breakthroughs on the Horizon

OpenAI forecasts incredible capabilities emerging from aligned, superhuman AI across diverse domains:

Healthcare Revolution

Disease diagnosis, drug discovery, and genetic research dramatically accelerated to save lives.

Sustainability at Scale

Precisely tailored climate solutions and conservation innovations rapidly deployed globally.

Augmented Education

Personalized tutors for lifelong learning customized to any student’s strengths and growth areas.

Enriched Experiences

Transformative entertainment, art, stories, games and applications that captivate our senses and minds.

Optimized Infrastructure

Traffic, energy, and resources expertly managed in cities for maximized accessibility, livability and harmony.

Navigating the Dual-Use Dilemma in AI

The same AI advances promising great good also enable potential misuses requiring vigilance:

Mass Manipulation Risks

Hyper-personalized disinformation deployed covertly across channels like social media and messaging.

Amplified Cyber Threats

Sophisticated attacks scaled rapidly with automated tools, including deepfakes used for identity theft and fraud.

Killer Robots

Autonomous weapons with facial recognition capabilities deployed without ethical constraints.

Automated Oppression

Predictive analytics and surveillance used to profile, track, control or preemptively punish vulnerable populations.

Runaway AI

Unconstrained, uncontrolled experiments leading to recursive self-improvement spiraling out of human understanding.

Enduring Guiding Principles for Responsible AI

To promote safety while encouraging progress, technology leaders should embrace key pillars of AI development:

Risk Assessment Mandates

Require extensive evaluations of potential harms before research proceeds or products launch.

Resilient Safeguards

Build robust, adaptable protections into core system design from the start to guard against downstream dangers.

Diversity in AI Teams

Actively recruit varied voices to evaluate tradeoffs, so that more stakeholders are represented and groupthink and blind spots are less likely.

Responsible Publication Norms

Carefully consider implications before openly sharing papers or tools that bad actors could exploit for harmful applications.

The Road Ahead: AI for Societal Good

Realizing superhuman AI’s benefits while averting catastrophes will define this century. Success requires unprecedented collaboration between governments, companies, researchers, and communities worldwide. By laying ethical foundations before capabilities outpace our comprehension, we can turn AI into a force that elevates human potential rather than eradicating it.
