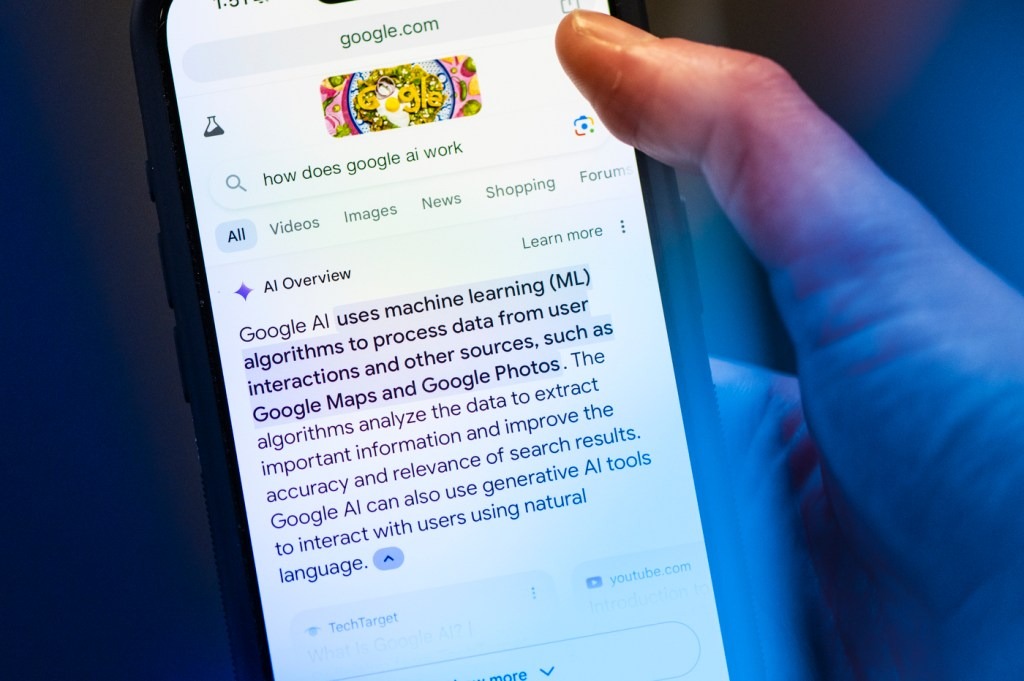

In the rapidly evolving world of artificial intelligence, Google’s AI Overviews feature has recently found itself in the spotlight for all the wrong reasons. A 9to5Google report describes a recurring problem in which the system, which generates AI-written summaries at the top of search results, keeps recommending glue on pizza. The bizarre suggestion has raised eyebrows, and it also highlights the challenges and risks of building products on large language models.

The Glue on Pizza Fiasco: A Recursive Loop of Misinformation

The saga of AI Overviews and its glue on pizza recommendation began with a viral incident in which the system suggested adding non-toxic glue to pizza sauce to help the cheese stick. The advice appears to have originated in an old joke comment on Reddit, which AI Overviews presented as a legitimate tip. What might have been an amusing oversight quickly turned into a cautionary tale about the power and pitfalls of machine learning.

What’s even more concerning is that AI Overviews has now entered a recursive loop of misinformation. According to reports, the system is citing news articles about its own original glue on pizza blunder as sources for repeating the recommendation. Because AI Overviews draws on web pages surfaced by Search when composing its answers, coverage of the mistake has itself become fuel for the mistake. This self-referential cycle exposes a fundamental weakness in how the system interprets information: it treats articles that report on a bad answer as if they endorsed it.

The Challenges of Training Large Language Models

The glue on pizza fiasco underscores the immense challenges involved in training large language models like the one powering AI Overviews. These models are fed vast amounts of data from various sources, including websites, books, and articles, in an attempt to generate human-like responses and provide accurate information.

However, the sheer scale and diversity of this data can lead to unintended consequences. The system may struggle to distinguish reliable sources from unreliable ones, or a joke from sincere advice, and so absorb and propagate misinformation. And because these models generate text from statistical patterns rather than checked facts, they can sometimes produce outputs that are biased, nonsensical, or even harmful.

Google’s Response: Scaling Back AI Overviews

Faced with the recurring glue on pizza recommendation, Google has taken steps to address the issue. Reports indicate that the company has reduced how often AI Overviews appear in search results. The move suggests that Google recognizes the problem and wants to protect the integrity of its search platform.

While scaling back AI Overviews is a sensible short-term fix, it also highlights the need for ongoing refinement of these systems. Google, along with other tech giants investing in artificial intelligence, must keep developing more robust methods for filtering and verifying both the data used to train their models and the sources those models draw on when answering queries. This includes stricter quality control, human oversight, and continuous monitoring of outputs for errors or biases.

The Importance of Accurate Data and Human Oversight

The glue on pizza incident serves as a stark reminder of the importance of accurate data in the development and deployment of AI systems. The quality and reliability of the training data directly impact the outputs generated by these models. It is crucial for companies like Google to invest in data curation and verification processes to ensure that their AI systems are built upon a foundation of trustworthy information.

Moreover, the role of human oversight cannot be overstated. While AI models can process and analyze vast amounts of data at an unprecedented scale, they lack the contextual understanding and critical thinking skills that humans possess. Human experts must be involved in the development, testing, and monitoring of these systems to identify and rectify errors, biases, and unintended consequences.

Conclusion

Google’s AI Overviews and its recurring glue on pizza recommendation serve as a cautionary tale about the challenges and risks associated with large language models and machine learning. The incident highlights the need for robust data verification processes, human oversight, and continuous improvement in the development of AI systems.

As we continue to push the boundaries of artificial intelligence, it is essential that we approach these technologies with a critical eye and a commitment to responsible development. By learning from the mistakes of AI Overviews and investing in accurate data and human oversight, we can harness the power of AI to provide reliable and beneficial information to users worldwide.

The glue on pizza fiasco may be a humorous anecdote, but it carries a serious message about the future of AI and the importance of getting it right. As we navigate this uncharted territory, let us strive for AI systems that are accurate, unbiased, and truly informative, leaving the glue where it belongs – far away from our pizzas.
