In a significant development that could reshape web security, new research claims that specially trained AI models can now match human-level performance in solving Google’s reCAPTCHA v2 challenges. The work, by ETH Zurich PhD student Andreas Plesner and his colleagues, reports a 100 percent success rate in bypassing these widely used security measures. That result raises concerns about the effectiveness of current bot detection methods.

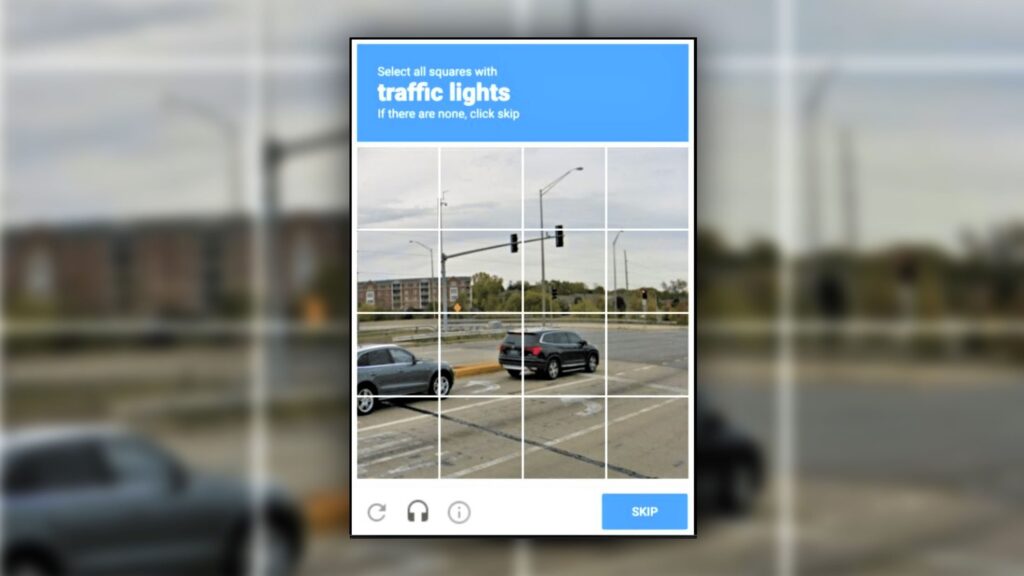

The CAPTCHA Challenge

CAPTCHAs (Completely Automated Public Turing test to tell Computers and Humans Apart) have long been a familiar sight for internet users. The reCAPTCHA v2 system, which asks users to identify specific objects within a grid of street images, has been a staple of web security for years. Despite Google’s efforts to phase out this system in favor of the “invisible” reCAPTCHA v3, millions of websites still rely on v2, either as a primary security measure or as a fallback option.

The AI Solution: YOLO Model

The researchers’ approach uses a fine-tuned version of the YOLO (You Only Look Once) object-recognition model. This open-source AI system, known for its real-time object detection, was trained on 14,000 labeled traffic images to identify objects across reCAPTCHA v2’s 13 candidate categories. The team also used a separate model for “type 2” challenges, which ask users to select the segments of a single image that contain a given object type.

Key Features of the AI Solution:

- Utilizes the YOLO model, capable of running on devices with limited computational power

- Trained on a dataset of 14,000 labeled traffic images

- Can identify objects across all 13 reCAPTCHA v2 categories

- Employs a separate model for segmented image challenges

Implications for Web Security

The success of this AI system in bypassing reCAPTCHA v2 has several significant implications:

- Vulnerability of Current Systems: It highlights potential weaknesses in widely used bot detection methods.

- Need for Advanced Security Measures: Web developers and security experts may need to accelerate the adoption of more sophisticated anti-bot technologies.

- Potential for Malicious Use: The research raises concerns about the potential for large-scale automated attacks on websites still using older CAPTCHA systems.

- Challenge to User Experience: As CAPTCHAs become less effective, websites may need to implement more intrusive or complex verification methods, potentially impacting user experience.

The Broader Context of AI and Cybersecurity

This breakthrough is part of a larger trend in the ongoing battle between security measures and increasingly sophisticated AI systems:

- AI in Cybersecurity: While AI is being used to enhance security measures, it’s also being leveraged to bypass them, creating a technological arms race.

- Ethical Considerations: Developing such AI systems raises questions about building tools that could be put to malicious use.

- Future of Bot Detection: This research may accelerate the development of more advanced, AI-resistant verification methods.

- Impact on Web Accessibility: As CAPTCHAs become less reliable, there’s a need to balance security with accessibility for all users, including those with disabilities.

Looking Ahead: The Future of Web Security

As AI continues to advance, several key areas will be worth monitoring:

- Evolution of CAPTCHA Systems: How will Google and other providers adapt their systems to counter these AI advancements?

- Adoption of Alternative Technologies: Will we see a shift towards behavioral analysis or other forms of bot detection?

- Regulatory Responses: How might lawmakers and regulatory bodies respond to the increasing sophistication of AI in bypassing security measures?

- AI vs. AI Security Measures: Could we see the development of AI-powered security systems designed to detect and counter AI-based attacks?

Conclusion

The reported 100 percent success rate in bypassing reCAPTCHA v2 marks a significant milestone for AI and cybersecurity. While it demonstrates the capabilities of modern AI systems, it also underscores the urgent need for more robust and adaptive security measures.

As we move forward, the challenge for security experts and web developers will be to stay ahead of these AI advancements, developing new methods to distinguish between human users and increasingly sophisticated bots. This ongoing technological arms race will likely shape the future of web security and user verification for years to come.
